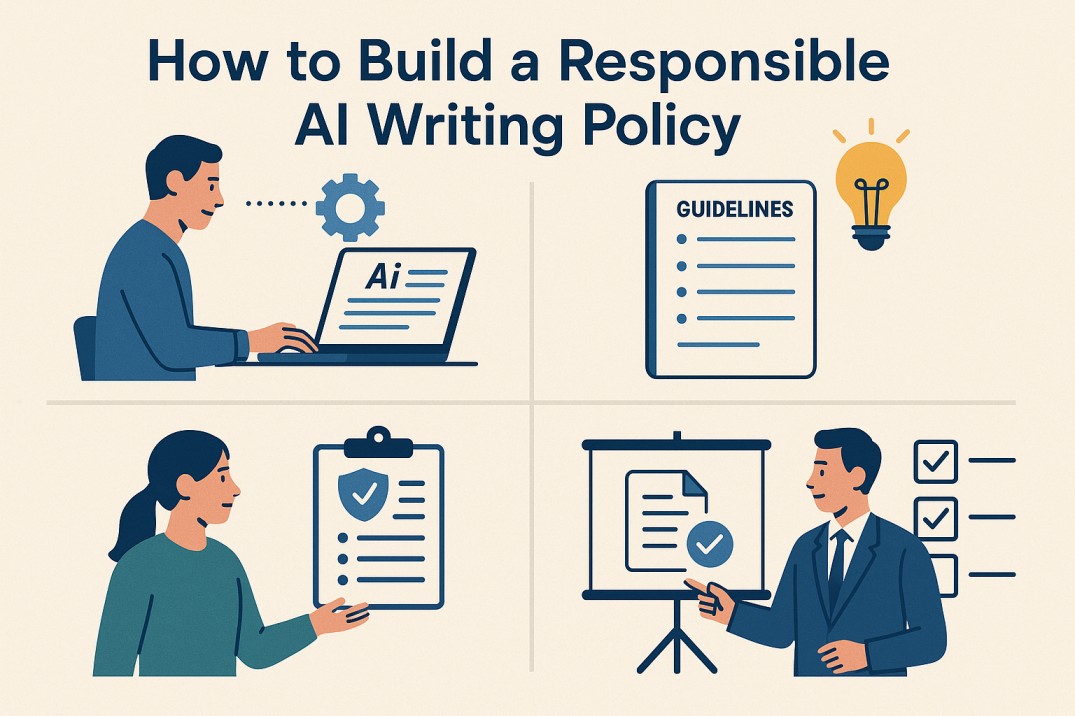

AI writing tools are quickly becoming part of everyday operations. From internal reports to client-facing content, these tools can increase efficiency, support brainstorming, and reduce time spent on repetitive writing tasks. But without clear boundaries, they also introduce risks such as inconsistency, poor-quality output, and misuse.

Companies must anticipate not only how employees will use AI, but also how they’ll search for solutions when unsure. A team member might look up phrases like “need someone to write my paper” when overwhelmed or unclear about expectations. Without guidance, they may turn to tools or platforms that fall outside your company’s standards.

A strong AI writing policy offers structure. It defines how AI can be used, where human oversight is required, and how to safeguard brand integrity, data security, and ethical communication. It gives teams clarity while encouraging responsible innovation.

Why AI Use Needs a Policy

AI-generated content can mimic human writing convincingly. That power comes with responsibility. When employees rely on AI tools without guidelines, the result may be inconsistent tone, unchecked errors, or even accidental misinformation.

A policy protects your company’s credibility. It outlines expectations, prevents misuse, and shows clients, partners, and stakeholders that your organization values thoughtful and ethical use of new technologies.

Set Clear Use Cases

Start by defining the scenarios where AI writing tools are appropriate. This should reflect your company’s specific needs, risk tolerance, and audience expectations.

For example:

- AI can support first drafts for blog posts, emails, and internal reports.

- It can summarize transcripts, outline presentations, or rephrase messages for clarity.

- It should not be used to respond to sensitive customer issues or produce legal documents.

Keep your list detailed. The more specific the guidance, the easier it is for teams to follow.

Common Approved Use Cases

- Drafting standard operating procedures

- Creating knowledge base articles

- Brainstorming ideas or campaign slogans

- Translating internal content for global teams

Each team should understand which tools are allowed and which tasks require human input from the start.
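One way to make a list like this easy to consult is to keep it as data that a script or intranet page can query. The sketch below is only an illustration: the task labels and the `check_use` helper are hypothetical, and the categories simply mirror the examples above.

```python
# Hypothetical task labels; a real policy would define its own.
APPROVED = {
    "blog_first_draft",
    "email_first_draft",
    "internal_report_draft",
    "transcript_summary",
    "presentation_outline",
}
PROHIBITED = {
    "sensitive_customer_reply",
    "legal_document",
}


def check_use(task: str) -> str:
    """Return the policy status for a task label."""
    if task in PROHIBITED:
        return "prohibited"
    if task in APPROVED:
        return "approved"
    # Anything not listed is treated as undecided rather than allowed.
    return "ask your team lead"
```

Defaulting unlisted tasks to "ask" rather than "approved" is a design choice here: it keeps new use cases visible to whoever maintains the policy.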

Define Human Review Standards

AI tools can speed up writing, but they cannot replace editorial judgment. Your policy should require human review for all externally published or client-facing content.

Assign responsibility. Decide who checks for:

- Factual accuracy

- Alignment with brand tone and messaging

- Bias, stereotypes, or vague claims

- Compliance with legal or regulatory standards

Make it clear that review is not optional. Teams must verify all AI output before sharing it with others.

Address Data Privacy and Security

Many AI platforms process text externally. If your team inputs confidential client data or sensitive business information, you could be exposing that content to third parties.

Your policy must include:

- A list of approved tools vetted for security

- Clear rules on what data may or may not be entered

- Internal procedures for anonymizing input when possible

Employees should understand that typing full names, financial details, or proprietary information into public tools creates risk.
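An anonymizing step can be as simple as masking obvious identifiers before text leaves the company. The following is a minimal sketch, assuming only two kinds of sensitive data (email addresses and card-like digit runs). The patterns and the `redact` function are illustrative assumptions, not a complete safeguard, and they will miss names and many other details.

```python
import re

# Illustrative patterns only; a real procedure would cover more cases.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(text: str) -> str:
    """Replace email addresses and card-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = CARD.sub("[CARD_NUMBER]", text)
    return text


print(redact("Contact jane.doe@example.com about card 4111 1111 1111 1111."))
# prints: Contact [EMAIL] about card [CARD_NUMBER].
```

Pattern-based masking like this reduces risk but does not remove it, so it works best alongside the rules on what may be entered at all.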

Require Source Transparency

When AI tools contribute meaningfully to content, it’s important to be clear about how the material was created. This builds trust both internally and externally, especially when accuracy and authorship matter.

Not every use case needs disclosure. Internal notes, planning documents, or early drafts may not require explanation. But external-facing materials, like reports, web copy, or marketing assets, should disclose AI involvement when it influences the final result.

Set standards for when and how to disclose AI assistance. Keep it simple and consistent, whether in footnotes, disclaimers, or internal records. Transparency helps prevent confusion about who is responsible for the final content and reinforces your organization’s commitment to ethical communication.

Control for Plagiarism and Duplication

AI models generate content based on patterns learned from large datasets. Without careful review, they can produce language too close to existing sources. That opens the door to plagiarism, even if unintentional.

Use plagiarism checkers regularly. Encourage originality in prompts. Prohibit using AI as a shortcut for copying public content, especially from competitors or high-authority sites.

Clarify that all final content must meet the company’s originality and quality standards, regardless of how it was drafted.
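For a quick internal spot check against a specific known source (not a replacement for a dedicated plagiarism checker), a draft can be compared word by word using Python's standard `difflib` module. This is a rough sketch: the function names and the 0.6 threshold are assumptions chosen for illustration, not a recommended standard.

```python
from difflib import SequenceMatcher


def overlap_ratio(draft: str, source: str) -> float:
    """Return a 0.0-1.0 similarity score based on word sequences."""
    a = draft.lower().split()
    b = source.lower().split()
    # autojunk=False keeps frequent words from being ignored in long texts.
    return SequenceMatcher(None, a, b, autojunk=False).ratio()


def needs_review(draft: str, source: str, threshold: float = 0.6) -> bool:
    """Flag a draft whose wording closely tracks the source."""
    return overlap_ratio(draft, source) >= threshold
```

A check like this only catches overlap with sources you already have on hand, so it complements rather than replaces regular use of plagiarism tools.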

Maintain Your Brand Voice

Without direction, AI content can feel generic or inconsistent. Your policy should include brand voice guidelines tailored for AI use. That means outlining:

- Preferred sentence structures

- Tone preferences (e.g., formal vs. conversational)

- Words to avoid or highlight

- Sample copy that reflects ideal tone

Use your style guide as a foundation, but create an AI-specific addendum. This ensures that every AI-assisted message still feels like it came from your team and not a tool.

The Role of Training and Support

A successful AI writing policy doesn’t stop at documentation. It requires training.

Offer onboarding sessions or quick-reference materials that teach employees how to:

- Prompt AI effectively

- Recognize low-quality or biased output

- Edit for tone, clarity, and accuracy

- Follow company guidelines in real writing tasks

Encourage team leads to give feedback on AI-assisted drafts. Learning what works and what doesn’t builds judgment over time.

Vendor Selection Matters

If your team uses platforms with built-in AI (e.g., CRM tools, content platforms), your policy should also define criteria for selecting or approving these vendors.

This includes:

- Transparency in how the AI works

- Data storage and retention practices

- Integration with your existing content review process

- Alignment with your privacy and compliance standards

Choosing trusted tools is as important as using them responsibly. Many companies work with platforms that integrate writing assistance without exposing data or losing control over tone.

Monitor and Update Regularly

AI is evolving fast, and your policy should evolve with it. Set a regular review schedule. Every six months is a good starting point.

Track where AI tools are being used across departments. Identify common pitfalls or repeat errors. Adjust the policy to reflect what’s working, and eliminate what’s not.

In some cases, tools advertised as essay writing services, such as DoMyEssay, may surface during employee searches for help. Your policy should address this directly. Make clear that public-facing academic services are not to be used for company tasks, especially in client communication or research preparation. The goal is to avoid dependency on platforms that fall outside approved practices.

Summary: Make AI Work for You

A responsible AI writing policy helps your company move fast without losing focus. It gives employees confidence, protects your brand, and supports innovation on your terms.

Rather than blocking AI tools entirely, guide how they’re used. Set rules that protect quality, privacy, and ethics. With the right structure in place, you can unlock the speed of AI without sacrificing trust, voice, or control.

When your team knows what’s allowed and why, they’ll write smarter, faster, and more responsibly.